#install mongodb docker container


Docker Tutorial for Beginners: Learn Docker Step by Step

What is Docker?

Docker is an open-source platform that enables developers to automate the deployment of applications inside lightweight, portable containers. These containers include everything the application needs to run—code, runtime, system tools, libraries, and settings—so that it can work reliably in any environment.

Before Docker, developers faced the age-old problem: “It works on my machine!” Docker solves this by providing a consistent runtime environment across development, testing, and production.

Why Learn Docker?

Docker is used by organizations of all sizes to simplify software delivery and improve scalability. As more companies shift to microservices, cloud computing, and DevOps practices, Docker has become a must-have skill. Learning Docker helps you:

Package applications quickly and consistently

Deploy apps across different environments with confidence

Reduce system conflicts and configuration issues

Improve collaboration between development and operations teams

Work more effectively with modern cloud platforms like AWS, Azure, and GCP

Who Is This Docker Tutorial For?

This Docker tutorial is designed for absolute beginners. Whether you're a developer, system administrator, QA engineer, or DevOps enthusiast, you’ll find step-by-step instructions to help you:

Understand the basics of Docker

Install Docker on your machine

Create and manage Docker containers

Build custom Docker images

Use Docker commands and best practices

No prior knowledge of containers is required, but basic familiarity with the command line and a programming language (like Python, Java, or Node.js) will be helpful.

What You Will Learn: Step-by-Step Breakdown

1. Introduction to Docker

We start with the fundamentals. You’ll learn:

What Docker is and why it’s useful

The difference between containers and virtual machines

Key Docker components: Docker Engine, Docker Hub, Dockerfile, Docker Compose

2. Installing Docker

Next, we guide you through installing Docker on:

Windows

macOS

Linux

You’ll set up Docker Desktop or Docker CLI and run your first container using the hello-world image.

3. Working with Docker Images and Containers

You’ll explore:

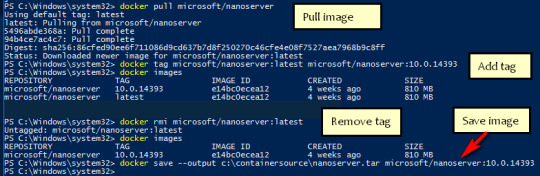

How to pull images from Docker Hub

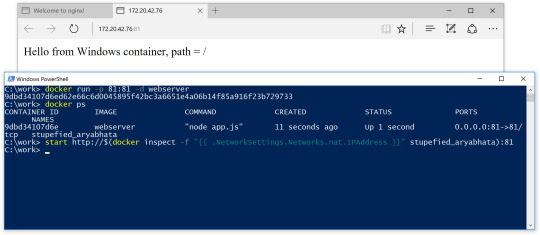

How to run containers using docker run

Inspecting containers with docker ps, docker inspect, and docker logs

Stopping and removing containers
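The commands named in this section can be laid out in the order they are typically used. The following is a minimal Python sketch that only assembles and prints the docker CLI calls; the image `nginx:latest` and the container name `web-demo` are illustrative assumptions, not values from the tutorial.

```python
import shlex


def lifecycle_commands(image="nginx:latest", name="web-demo"):
    """Return the docker CLI calls for one container's life, in order.

    The default image and container name are hypothetical placeholders.
    """
    return [
        ["docker", "pull", image],                        # fetch the image from Docker Hub
        ["docker", "run", "-d", "--name", name, image],   # start it in the background
        ["docker", "ps"],                                 # list running containers
        ["docker", "inspect", name],                      # print the container's JSON description
        ["docker", "logs", name],                         # show the container's output
        ["docker", "stop", name],                         # stop the container
        ["docker", "rm", name],                           # remove the stopped container
    ]


# Print each command as it would be typed in a shell.
for argv in lifecycle_commands():
    print(shlex.join(argv))
```

Each inner list can be passed to `subprocess.run` on a machine where Docker is installed; printing first makes it easy to review what would be executed.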

4. Building Custom Docker Images

You’ll learn how to:

Write a Dockerfile

Use docker build to create a custom image

Add dependencies and environment variables

Optimize Docker images for performance

5. Docker Volumes and Networking

Understand how to:

Use volumes to persist data outside containers

Create custom networks for container communication

Link multiple containers (e.g., a Node.js app with a MongoDB container)
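As a rough illustration of the volume and network ideas above, the sketch below builds the docker CLI calls for a MongoDB container that stores its data in a named volume and joins a user-defined network. The names `app-net`, `mongo-data`, and `mongo-dev`, and the `mongo:7` image tag, are assumptions chosen for the example. `/data/db` is the data directory used by the official MongoDB image.

```python
import shlex


def setup_commands(network="app-net", volume="mongo-data"):
    """Commands that create the network and the named volume (hypothetical names)."""
    return [
        ["docker", "network", "create", network],
        ["docker", "volume", "create", volume],
    ]


def mongo_run_command(name="mongo-dev", network="app-net", volume="mongo-data",
                      image="mongo:7", host_port=27017):
    """Build the `docker run` call for a MongoDB container."""
    return [
        "docker", "run", "-d",
        "--name", name,
        "--network", network,          # other containers on this network reach it by name
        "-v", f"{volume}:/data/db",    # keep database files in the named volume
        "-p", f"{host_port}:27017",    # publish MongoDB's default port on the host
        image,
    ]


if __name__ == "__main__":
    for argv in setup_commands() + [mongo_run_command()]:
        print(shlex.join(argv))
```

An application container started with the same `--network` value could then connect to the database at `mongodb://mongo-dev:27017`, and the data would survive removal of the MongoDB container because it lives in the volume.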

6. Docker Compose (Bonus Section)

Docker Compose lets you define multi-container applications. You’ll learn how to:

Write a docker-compose.yml file

Start multiple services with a single command

Manage application stacks easily

Real-World Examples Included

Throughout the tutorial, we use real-world examples to reinforce each concept. You’ll deploy a simple web application using Docker, connect it to a database, and scale services with Docker Compose.

Example Projects:

Dockerizing a static HTML website

Creating a REST API with Node.js and Express inside a container

Running a MySQL or MongoDB database container

Building a full-stack web app with Docker Compose

Best Practices and Tips

As you progress, you’ll also learn:

Naming conventions for containers and images

How to clean up unused images and containers

Tagging and pushing images to Docker Hub

Security basics when using Docker in production

What’s Next After This Tutorial?

After completing this Docker tutorial, you’ll be well-equipped to:

Use Docker in personal or professional projects

Learn Kubernetes and container orchestration

Apply Docker in CI/CD pipelines

Deploy containers to cloud platforms

Conclusion

Docker is an essential tool in the modern developer's toolbox. By learning Docker step by step in this beginner-friendly tutorial, you’ll gain the skills and confidence to build, deploy, and manage applications efficiently and consistently across different environments.

Whether you’re building simple web apps or complex microservices, Docker provides the flexibility, speed, and scalability needed for success. So dive in, follow along with the hands-on examples, and start your journey to mastering containerization with Docker!


MEAN Stack Development: 15 tools to use for your project

Introduction

The MEAN stack combines MongoDB, Express.js, Angular, and Node.js into a powerful stack for building dynamic web applications. Web development evolves quickly, so the right supporting tools are necessary to keep developers productive, improve their workflows, and ensure code quality.

These tools are discussed in detail below, along with their current pricing.

15 tools for MEAN Stack development

1. Visual Studio Code (VSCode)

Visual Studio Code owes its popularity among developers to its light footprint and rich feature set.

Key Features:

IntelliSense: Provides intelligent completions based on variable types, function definitions, and imported modules.

Integrated Terminal: Lets developers run commands directly from the editor.

Extensions: A large library of extensions, many tailored to JavaScript frameworks, adds tools for debugging, linting, and version control.

Price: Free

2. Postman

Postman is an essential tool for API development and testing.

Key Features:

Sending Requests: You can send HTTP requests to your Express.js backend with ease.

Inspecting Responses: View responses from your backend server in formats ranging from JSON to XML and confirm that everything works as expected.

Automated Tests: Write test scripts that run automatically whenever a request is sent to verify that your API behaves correctly.

Pricing: Offers a free plan; paid plans start at $12/user/month for additional features.

3. MongoDB Compass

MongoDB Compass is a graphical user interface for administering MongoDB databases.

Key Features:

Visual Data Exploration: Navigate collections and documents intuitively.

Query Performance Insights: Analyze query performance and optimize indexes directly from the interface.

Schema Visualization: Visualize the structure of your data, which goes a long way toward designing better databases.

Pricing: Free

4. Angular CLI

Angular Command Line Interface is a powerful tool that streamlines development for Angular applications.

Main Features:

Project Scaffolding: Bootstrap new projects with a well-organized structure in a single streamlined step.

Code Generation: Generate components, services, modules, and more automatically, eliminating hand-written boilerplate code.

Build Optimisation: Build and deploy apps easily with automatic production optimisations.

Pricing: Free

5. Node.js Package Manager (npm)

npm is the default package manager for Node.js, and virtually every Node.js project depends on it.

Key Features:

Dependency Management: Install, update, and maintain third-party libraries and modules.

Custom Scripts: Run tests or build your application directly from scripts defined in the package.json file.

Version Control: Track package versions to ensure compatibility across different environments.

Pricing: Free

6. Git and GitHub

Version control is an important part of collaborative software development.

Main Features:

Change Tracking: Track every change made to the codebase.

Branching: Develop features independently of other changes, without affecting the main codebase until you are ready to merge.

Collaboration Tools: Simplify team collaboration with pull requests, code review, and issue tracking.

Pricing: Git is free; GitHub offers free accounts with paid plans starting at $4/user/month for extra features.

7. Docker

Docker enables programmers to package applications as containers that run consistently in different environments.

Key Features:

Environment Consistency: Applications behave the same way in development, testing, and production environments.

Isolation of Dependencies: Every application runs inside its own container, so dependencies do not conflict.

Simplified Deployment: Deploy applications quickly by packaging them with all necessary dependencies.

Pricing: Free tier available; paid plans starting at $5/month for extra features.

8. Webpack

Webpack is a module bundler that optimizes JavaScript files for production use.

Key Features:

Code Splitting: Take big codebases and break them into smaller chunks to load only on demand, improving performance.

Asset Management: Manage stylesheets, images, and other assets alongside your JavaScript files.

Hot Module Replacement (HMR): Modules are updated live during development, with no need to refresh the entire page.

Pricing: It is free.

9. MochaJS

MochaJS is a flexible testing framework for Node.js applications with strong support for asynchronous testing.

Main Features:

Test Suite Management: Organize tests in suites for easy management.

Rich Reporting: Generate detailed reports on test results.

Assertion Library Support: Works with the assertion library of your choice; pairing it with Chai or SinonJS extends what your tests can do.

Pricing: Free

10. Chai

Chai is an assertion library widely used in conjunction with MochaJS.

Key Features:

Flexible Assertion Styles: Developers can choose among multiple assertion styles (should, expect, assert).

Plugins Support: Extend functionality with plugins, for example, Chai-as-promised for promise testing or Chai-http for HTTP assertions.

Pricing: Free.

11. ESLint

ESLint, a static code analysis tool, is a must-have for ensuring code quality and consistency across projects.

Key Features:

Linting Rules Configuration: Configure rules according to team standards or the needs of a project.

Real-time Feedback: Identify issues in your editor as you code.

Integration with CI/CD Pipelines: Prevent merging of low-quality code into the main branch with automated checks.

Pricing: Free.

12. Swagger

Swagger (OpenAPI) handles API documentation and testing.

Key Features:

Interactive Documentation: Generate interactive API documentation directly from annotations in your code.

API Testing Interface: Test endpoints directly from the documentation interface.

Client SDK Generation: Generate client libraries in the languages of your choice based on the API spec.

Pricing: Free tier available; paid plans start at $75/month for advanced features.

13. PM2

PM2 is a process manager for Node.js applications.

Key Features:

Process Monitoring and Management: Automatically restarts application processes when they crash, keeping them running continuously.

Load Balancing Support: Spreads incoming traffic across multiple instances of an application.

Log Management: Collects logs from all instances in one place.

Pricing: Free; paid plans start at $15/month for additional features.

14. Figma

Figma supports design collaboration in MEAN stack projects.

Key Features:

Real-Time Collaboration: Lets designers and developers work together on UI/UX designs.

Design Prototyping: Create interactive prototypes and share them for feedback before implementation begins.

Pricing: Offers a free plan; paid plans from $12/user/month for access to more advanced functionalities.

15. Robo 3T

Robo 3T is another GUI tool for managing MongoDB.

Key Features:

User-friendly Interface: An intuitive graphical interface makes interacting with MongoDB databases easier.

Query Building Tools: Help users create even complex queries more easily, with less reliance on the command line.

Pricing: Free

Best Practices for MEAN Stack Development

In addition to utilizing essential tools, following best practices can significantly enhance your MEAN stack development process. Below are some key practices every developer should consider:

Modular Architecture: Break down applications into smaller, reusable modules or components. Benefits: Enhances maintainability and reusability of code, making it easier to manage and test.

Environment Configuration: Use environment variables to manage configuration settings for different environments (development, testing, production). Benefits: Improves security and flexibility by keeping sensitive information out of the codebase.

Version Control: Utilize Git for version control to track changes and collaborate effectively. Benefits: Facilitates collaboration, allows rollback of changes, and maintains a history of the project.

Code Reviews: Implement regular code reviews within the team to ensure code quality and adherence to standards. Benefits: Helps catch bugs early, promotes knowledge sharing, and maintains coding standards.

Automated Testing: Write unit tests and integration tests using frameworks like Mocha and Chai. Benefits: Ensures code reliability and reduces the likelihood of introducing bugs during development.

API Documentation: Use tools like Swagger to document APIs clearly and interactively. Benefits: Enhances collaboration between frontend and backend teams and serves as a reference for users.

Error Handling: Implement comprehensive error handling throughout the application. Benefits: Improves user experience by providing informative error messages and prevents application crashes.

Performance Optimization: Monitor application performance and optimize database queries and server response times. Benefits: Enhances user experience by reducing load times and improving responsiveness.

Security Best Practices: Follow security best practices such as input validation, sanitization, and using HTTPS. Benefits: Protects applications from common vulnerabilities such as injection attacks (including NoSQL injection against MongoDB) and XSS.

Continuous Integration/Deployment (CI/CD): Implement CI/CD pipelines using tools like Jenkins or GitHub Actions for automated testing and deployment. Benefits: Streamlines the deployment process, reduces manual errors, and ensures consistent delivery of updates.

How can Acquaint Softtech help?

Acquaint Softtech is an outsourcing IT company, offering two services: software development outsourcing and IT staff augmentation. We are proud of developing new applications within the framework of Laravel, since we are an official Laravel partner.

The best option to hire remote developers for your company is Acquaint Softtech. With the help of our accelerated onboarding procedure, developers become a part of your current team in 48 hours at most.

We are also your best bet for any outsourced software development work because of our $15 hourly fee. To fulfill your requirement for specialist development, we can assist you in hiring remote developers, hiring MEAN stack developers, hiring MERN stack developers, and outsourced development services. Now let's collaborate to grow your company to new heights.

In addition, Acquaint Softtech provides custom software development services and on-demand app development services.

Wrapping Up!

Using these tools alongside best practices will greatly enhance developer productivity, team coordination, and the quality of the code you deliver. Most of these resources are free or affordable, so take advantage of them to keep your developers up to date, adapt easily to changing project requirements, and build a robust MEAN stack web application.


Ansible and Docker: Automating Container Management

In today's fast-paced tech environment, containerization and automation are key to maintaining efficient, scalable, and reliable infrastructure. Two powerful tools that have become essential in this space are Ansible and Docker. While Docker enables you to create, deploy, and run applications in containers, Ansible provides a simple yet powerful automation engine to manage and orchestrate these containers. In this blog post, we'll explore how to use Ansible to automate Docker container management, including deployment and orchestration.

Why Combine Ansible and Docker?

Combining Ansible and Docker offers several benefits:

Consistency and Reliability: Automating Docker container management with Ansible ensures consistent and reliable deployments across different environments.

Simplified Management: Ansible’s easy-to-read YAML playbooks make it straightforward to manage Docker containers, even at scale.

Infrastructure as Code (IaC): By treating your infrastructure as code, you can version control, review, and track changes over time.

Scalability: Automation allows you to easily scale your containerized applications by managing multiple containers across multiple hosts seamlessly.

Getting Started with Ansible and Docker

To get started, ensure you have Ansible and Docker installed on your system. You can install Ansible using pip:

    pip install ansible

And Docker by following the official Docker installation guide for your operating system.

Next, you'll need to set up an Ansible playbook to manage Docker. Here’s a simple example:

Example Playbook: Deploying a Docker Container

Create a file named deploy_docker.yml:

    ---
    - name: Deploy a Docker container
      hosts: localhost
      tasks:
        - name: Ensure Docker is installed
          apt:
            name: docker.io
            state: present
          become: yes

        - name: Start Docker service
          service:
            name: docker
            state: started
            enabled: yes
          become: yes

        - name: Pull the latest nginx image
          docker_image:
            name: nginx
            tag: latest
            source: pull

        - name: Run a Docker container
          docker_container:
            name: nginx
            image: nginx
            state: started
            ports:
              - "80:80"

In this playbook:

We ensure Docker is installed and running.

We pull the latest nginx Docker image.

We start a Docker container with the nginx image, mapping port 80 on the host to port 80 on the container.
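The post does not show how the playbook is launched. As a hedged sketch, the helper below assembles an `ansible-playbook` invocation for the `deploy_docker.yml` file created above. `--check` requests a dry run and `--ask-become-pass` prompts for the sudo password needed by the `become: yes` tasks. The helper name `playbook_command` is an assumption made for this example.

```python
import shlex


def playbook_command(playbook="deploy_docker.yml", check=False, ask_become_pass=False):
    """Build the ansible-playbook argv for the given playbook file."""
    argv = ["ansible-playbook", playbook]
    if check:
        argv.append("--check")            # dry run: report changes without applying them
    if ask_become_pass:
        argv.append("--ask-become-pass")  # prompt for the privilege-escalation password
    return argv


if __name__ == "__main__":
    # Show a dry run first, then the real run.
    print(shlex.join(playbook_command(check=True, ask_become_pass=True)))
    print(shlex.join(playbook_command(ask_become_pass=True)))
```

Running the dry run first is a cautious habit rather than a requirement. Some modules report limited information in check mode, so the real run remains the authoritative test.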

Automating Docker Orchestration

For more complex scenarios, such as orchestrating multiple containers, you can extend your playbook. Here’s an example of orchestrating a simple web application stack with Nginx, a Node.js application, and a MongoDB database:

    ---
    - name: Orchestrate web application stack
      hosts: localhost
      tasks:
        - name: Ensure Docker is installed
          apt:
            name: docker.io
            state: present
          become: yes

        - name: Start Docker service
          service:
            name: docker
            state: started
            enabled: yes
          become: yes

        - name: Pull necessary Docker images
          docker_image:
            name: "{{ item }}"
            tag: latest
            source: pull
          loop:
            - nginx
            - node
            - mongo

        - name: Run MongoDB container
          docker_container:
            name: mongo
            image: mongo
            state: started
            ports:
              - "27017:27017"

        - name: Run Node.js application container
          docker_container:
            name: node_app
            image: node
            state: started
            volumes:
              - ./app:/usr/src/app
            working_dir: /usr/src/app
            command: "node app.js"
            links:
              - mongo

        - name: Run Nginx container
          docker_container:
            name: nginx
            image: nginx
            state: started
            ports:
              - "80:80"
            volumes:
              - ./nginx.conf:/etc/nginx/nginx.conf
            links:
              - node_app

Conclusion

By integrating Ansible with Docker, you can streamline and automate your container management processes, making your infrastructure more consistent, scalable, and reliable. This combination allows you to focus more on developing and less on managing infrastructure. Whether you're managing a single container or orchestrating a complex multi-container environment, Ansible and Docker together provide a powerful toolkit for modern DevOps practices.

Give it a try and see how much time and effort you can save by automating your Docker container management with Ansible!

For more details click www.qcsdclabs.com


Graylog Docker Compose Setup: An Open Source Syslog Server for Home Labs


Graylog is an excellent open-source log management platform for both production and home lab environments. With Docker Compose, you can quickly launch and configure Graylog as a Syslog server, along with all the containers it needs, such as OpenSearch and MongoDB. Let’s look at this process.


How to Install MongoDB on Docker Container linux.

Hi Guys! Hope you are doing well. Let’s learn about “How to Install MongoDB on Docker Container Linux”. Docker is an open source platform where developers can package their applications and run them inside Docker containers. It is a PaaS (Platform as a Service) product that uses OS-level virtualisation to deliver software in packages called containers. The containers are the bundle of…


How to Build and Deploy a Web App With Buddy

Moving code from development to production doesn't have to be as error-prone and time-consuming as it often is. By using Buddy, a continuous integration and delivery tool that doubles up as a powerful automation platform, you can automate significant portions of your development workflow, including all your builds, tests, and deployments.

Unlike many other CI/CD tools, Buddy has a pleasant and intuitive user interface with a gentle learning curve. It also offers a large number of well-tested actions that help you perform common tasks such as compiling sources and transferring files.

In this tutorial, I'll show you how you can use Buddy to build, test, and deploy a Node.js app.

Prerequisites

To be able to follow along, you must have the following installed on your development server:

Node.js 10.16.3 or higher

MongoDB 4.0.10 or higher

Git 2.7.4 or higher

1. Setting Up a Node.js App

Before you dive into Buddy, of course, you'll need a web app you can build and deploy. If you have one already, feel free to skip to the next step.

If you don't have a Node.js app you can experiment with, you can create one quickly using a starter template. Using the popular Hackathon starter template is a good idea because it has all the characteristics of a typical Node.js app.

Fork the template on GitHub and use git to download the fork to your development environment.

git clone https://github.com/hathi11/hackathon-starter.git

It's worth noting that Buddy works with Git repositories. It supports repositories hosted on GitHub, Bitbucket, and other popular Git hosts. Buddy also has a built-in Git hosting solution, or you can just as easily use Buddy with your own private Git servers.

Once the clone's complete, use npm to install all the dependencies of the web app.

    cd hackathon-starter/
    npm install

At this point, you can run the app locally and explore it using your browser.

node app.js

Here's what the web app looks like:

2. Creating a Buddy Project

If you don't have a Buddy account already, now is a good time to create one. Buddy offers two premium tiers and a free tier, all of which are cloud based. The free tier, which gives you 1 GB of RAM and 2 virtual CPUs, will suffice for now.

Once you're logged in to your Buddy account, press the Create new project button to get started.

When prompted to select a Git hosting provider, choose GitHub and give Buddy access to your GitHub repositories.

You should now be able to see all your GitHub repositories on Buddy. Click on the hackathon-starter repository to start creating automations for it.

Note that Buddy automatically recognizes our Node.js app as an Express application. It's because our starter template uses the Express web app framework.

3. Creating a Pipeline

On Buddy, a pipeline is what allows you to orchestrate and run all your tasks. Whenever you need to automate something with Buddy, you either create a new pipeline for it or add it to an existing pipeline.

Click on the Add a new pipeline button to start creating your first pipeline. In the form shown next, give a name to the pipeline and choose On push as the trigger mode. As you may have guessed, choosing this mode means that the pipeline is executed as soon as you push your commits to GitHub.

The next step is to add actions to your pipeline. To help you get started, Buddy intelligently generates a list of actions that are most relevant to your project.

For now, choose the Node.js action, which loads a Docker container that has Node.js installed on it. We'll be using this action to build our web app and run all its tests. So, on the next screen, go ahead and type in the following commands:

    npm install
    npm test

4. Attaching a Service

Our web app uses MongoDB as its database. If it fails to establish a connection to a MongoDB server on startup, it will exit with an error. Therefore, our Docker container on Buddy must have access to a MongoDB server.

Buddy allows you to easily attach a wide variety of databases and other services to its Docker containers. To attach a MongoDB server, switch to the Services tab and select MongoDB. In the form shown next, you'll be able to specify details such as the hostname, port, and MongoDB version you prefer.

Make a note of the details you enter and press the Save this action button.

Next, you must configure the web app to use the URI of Buddy's MongoDB server. To do so, you can either change the value of the MONGODB_URI field in the .env.example file, or you can use an environment variable on Buddy. For now, let's go ahead with the latter option.

So switch to the Variables tab and press the Add a new variable button. In the dialog that pops up, set the Key field to MONGODB_URI and the Value field to a valid MongoDB connection string that's based on the hostname you chose earlier. Then press the Create variable button.

The official documentation has a lot more information about using environment variables in a Buddy pipeline.
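To make the shape of the MONGODB_URI value concrete, here is a small Python sketch that assembles a standard `mongodb://` connection string and falls back to a local default when the variable is unset. The hostname `mongo`, the database name `test`, and the helper names are assumptions for illustration; use the hostname you entered in the service form.

```python
import os
from urllib.parse import quote_plus


def mongodb_uri(host, port=27017, database="test", user=None, password=None):
    """Assemble a mongodb:// connection string from its parts."""
    auth = ""
    if user is not None and password is not None:
        # Credentials are percent-encoded so characters like '@' and ':' stay unambiguous.
        auth = f"{quote_plus(user)}:{quote_plus(password)}@"
    return f"mongodb://{auth}{host}:{port}/{database}"


def resolve_uri(env=None):
    """Prefer MONGODB_URI from the environment, else fall back to localhost."""
    env = os.environ if env is None else env
    return env.get("MONGODB_URI", mongodb_uri("localhost"))


if __name__ == "__main__":
    # Value one might paste into the pipeline variable (hypothetical hostname).
    print(mongodb_uri("mongo"))
    print(resolve_uri())
```

Reading the URI from the environment is what lets the same code talk to the pipeline's MongoDB service during tests and to a different database elsewhere.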

5. Running the Pipeline

Our pipeline is already runnable, even though it has only one action. To run it, press the Run pipeline button.

You will now be taken to a screen where you can monitor the progress of the pipeline in real time. Furthermore, you can press any of the Logs buttons (there's one for each action in the pipeline) to take a closer look at the actual output of the commands that are being executed.

You can, of course, also run the pipeline by pushing a commit to your GitHub repository. I suggest you make a few changes to the web app, such as changing its header by modifying the views/partials/header.pug file, and then run the following commands:

    git add .
    git commit -m "changed the header"
    git push origin master

When the last command has finished, you should be able to see a new execution of the pipeline start automatically.

6. Moving Files

When a build is successful and all the tests have passed, you'd usually want to move your code to production. Buddy has predefined actions that help you securely transfer files to several popular hosting solutions, such as the Google Cloud Platform, DigitalOcean, and Amazon Web Services. Furthermore, if you prefer using your own private server that runs SFTP or FTP, Buddy can directly use those protocols too.

In this tutorial, we'll be using a Google Compute Engine instance, which is nothing but a virtual machine hosted on Google's cloud, as our production server. So switch to the Actions tab of the pipeline and press the + button shown below the Build and test action to add a new action.

On the next screen, scroll down to the Google Cloud Platform section and select the Compute Engine option.

In the form that pops up, you must specify the IP address of your VM. Additionally, to allow Buddy to connect to the VM, you must provide a username and choose an authentication mode.

The easiest authentication mode in my opinion is Buddy's SSH key. When you choose this mode, Buddy will display an RSA public key that you can simply add to your VM's list of authorized keys.

To make sure that the credentials you entered are valid, you can now press the Test action button. If there are no errors, you should see a test log that looks like this:

Next, choose GitHub repository as the source of the files and use the Remote path field to specify the destination directory on the Google Cloud VM. The Browse button lets you browse through the filesystem of the VM and select the right directory.

Finally, press the Add this action button.

7. Using SSH

Once you've copied the code to your production server, you must again build and install all its dependencies there. You must also restart the web app for the code changes to take effect. To perform such varied tasks, you'll need a shell. The SSH action gives you one, so add it as the last action of your pipeline.

In the form that pops up, you must again specify your VM's IP address and login credentials. Then, you can type in the commands you want to run. Here's a quick way to install the dependencies and restart the Node.js server:

    pkill -HUP node                          # stop node server
    cd my_project
    npm install                              # install dependencies
    export MONGODB_URI=
    nohup node app.js > /dev/null 2>&1 &     # start node server

As shown in the Bash code above, you must reset the MONGODB_URI environment variable. This is to make sure that your production server connects to its own MongoDB instance, instead of Buddy's MongoDB service.

Press the Add this action button again to update the pipeline.

At this point, the pipeline has three actions that run sequentially. It should look like this:

Press the Run pipeline button to start it. If there are no errors, it should take Buddy only a minute or two to build, test, and deploy your Node.js web app to your Google Cloud VM.

Conclusion

Being able to instantly publish new features, bug fixes, and enhancements to your web apps gives you a definite edge over your competition. In this tutorial, you learned how to use Buddy's pipelines, predefined actions, and attachable services to automate and speed up common tasks such as building, testing, and deploying Node.js applications.

There's a lot more the Buddy platform can do. To learn more about it, do refer to its extensive documentation.

by Ashraff Hathibelagal via Envato Tuts+ Code https://ift.tt/33rH96G


MongoDB backup to S3 on Kubernetes- Alt Digital Technologies

Introduction

Kubernetes CronJob makes it very easy to run Jobs on a time-based schedule. These automated jobs run like Cron tasks on a Linux or UNIX system.

In this post, we’ll make use of Kubernetes CronJob to schedule a recurring backup of the MongoDB database and upload the backup archive to AWS S3.

There are several ways of achieving this, but then again, I had to stick to one using Kubernetes since I already have a Kubernetes cluster running.

Prerequisites:

Docker installed on your machine

Container repository (Docker Hub, Google Container Registry, etc) – I’ve used docker hub

Kubernetes cluster running

Steps to achieve this:

MongoDB installed on the server and running or MongoDB Atlas – I’ve used Atlas

AWS CLI installed in a docker container

A bash script will be run on the server to backup the database

AWS S3 Bucket configured

Build and deploy on Kubernetes

MongoDB Setup:

You can set up a mongo database on your server or use a MongoDB Atlas cluster instead. The Atlas cluster is a great way to set up a mongo database and is free for M0 clusters. You can also use a mongo database on your server or on a Kubernetes cluster.

After creating your MongoDB instance, you will need its connection string. Keep it somewhere safe, because we will need it later. Atlas offers more than one connection string, and it can be confusing to know which one to pick: select the one offered for MongoDB Compass.
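The backup step listed above (a bash script that dumps the database and uploads the archive to S3) could look roughly like the sketch below. It is not the author's script: the `MONGODB_URI` and `S3_BUCKET` variables, the `backups` key prefix, the `RUN_BACKUP` switch, and the function names are assumptions made for illustration. It relies on `mongodump --archive --gzip` writing to standard output and on `aws s3 cp -` reading from standard input.

```shell
#!/usr/bin/env bash
# Sketch of a MongoDB-to-S3 backup script; names below are illustrative.

# Build the S3 object key for a backup taken at the given timestamp.
backup_key() {
  printf '%s/mongodb-%s.archive.gz\n' "$1" "$2"
}

# Stream a compressed dump straight to S3 without a temporary file.
# MONGODB_URI and S3_BUCKET are assumed to be injected by the CronJob,
# for example from a Kubernetes Secret.
run_backup() {
  set -euo pipefail  # bash-specific: fail if either side of the pipe fails
  local key
  key="$(backup_key backups "$(date -u +%Y-%m-%dT%H-%M-%SZ)")"
  mongodump --uri="$MONGODB_URI" --archive --gzip \
    | aws s3 cp - "s3://${S3_BUCKET}/${key}"
}

# Run only when explicitly requested, so the file can be sourced safely.
if [ "${RUN_BACKUP:-0}" = "1" ]; then
  run_backup
fi
```

Inside the container image, the CronJob's command would then be something like `RUN_BACKUP=1 bash backup.sh`, where `backup.sh` is a hypothetical file name for this script.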


Welcome to a special Monthly Moo Product Edition. We have so much to share that we needed to create a special edition of the Monthly Moo to cover all the latest features that are now available. And to see these in action, sign up for our webinar that's coming on Tuesday. More details below.

With many new features recently rolled out we need to group them in logical order to cover each of the following categories:

Correlation
Workflow Automation
Collector
Collaboration
Administration

Correlation

Incident Origin - At a glance, Moogsoft now displays which correlation was used to create the incident. Easily click on the link within the incident and navigate to the correlation definition used to correlate all the alerts into one actionable and insightful incident.

incident origin of Moogsoft software dashboard incident origin with correlation definition

Correlation Containers - Moogsoft allows Correlation definitions to be processed in a pre-defined order. This unique capability provides additional logic and granularity for single matching as well as alerts belonging to one or more incidents. Users have the ability to group Correlation definitions into Correlation containers for prioritization and ordering.

Correlation Preview - Previewing the results of a Correlation before enabling it is now available. As you define the Correlation definition, you can preview the alerts to be included for processing to ensure your desired outcomes are met. Don’t like what you see? No harm, just change your filters and hit the Scope Preview button.

scope preview of a Correlation

Workflow Automation

Workflow Preview - The Workflow Engine provides a no code/low code interface that uses an intuitive drag and drop technique to build and deploy both simple and complex workflows. Understanding what triggers each workflow is extremely important for deploying new workflows right the first time.

event workflow

Collector

Advanced Configuration - The Moogsoft Collector is a branch of the Vector open source code owned by Datadog. Moogsoft develops Plugins for various data sources, such as HTTP, MongoDB, SystemOS, Docker and many others. This new feature provides the best of both worlds, allowing users to configure Moogsoft Plugins as well as Vector sources.

Moogsoft Collector preview

Windows Supported - The Moogsoft Collector now supports the Windows Operating System. The installation is very easy and requires users to run a simple MSI executable and follow the guided instructions.

Moogsoft Collector preview

Collaboration

MS Teams - Bi-directional integration between Moogsoft and Microsoft Teams is now available. Simply configure the Outbound webhook in Moogsoft and install the package for MS Teams, and watch your teams collaborate around solving issues faster than ever before.

Microsoft Teams collaboration dashboard

Zoom - Automatically start a Zoom meeting based on certain criteria of an incident. Instantly start or schedule for a future time and day.

Confluence - Automatically create a document in Confluence for Post Mortems and Retrospectives.

xMatters - Bi-directional integration for oncall alert notification and escalation with xMatters. Users can choose to update the incidents either in Moogsoft or xMatters mobile device or UI.

Webex Teams - Easily configure Moogsoft to post in different Webex Team Rooms. Each Webex Team Space can have an Incoming Webhook that can be configured from the Webex App Hub.

Administration

Custom Roles - The standard roles of Owner, Administrator and Operator have now been extended to include any number of custom roles. Simply create a new role and define the required permissions. These can be used with your SSO deployment.

Custom roles for each Moogsoft user

Moogsoft in the News

Check out the recap:

Continuous Availability vs. Continuous Change - Read how to limit customer impact during cloud adoption - whether cloud migration for the first time, hybrid cloud adoption, or extending cloud-native with newer microservice architecture.

More Tools + More People = Increased Complexity - Consider what happens if digital apps or services go down. Companies lose revenue, decrease productivity, compromise customer loyalty and the list of repercussions goes on, depending on the business. Read how to ensure continuous availability.

Upcoming Events

May Monthly Moo: Product Edition: To see these updates in action, sign up for the webinar here!

Subscribe to newsletter to make sure you receive the latest updates

Moogsoft is the AI-driven observability leader that provides intelligent monitoring solutions for smart DevOps. Moogsoft delivers the most advanced cloud-native, self-service platform for software engineers, developers and operators to instantly see everything, know what’s wrong and fix things faster.


Spring Boot Microservices + MongoDB in Docker Containers | Step by step tutorial for Beginners

Full Video Link: https://youtu.be/qWzBUwKiCpM Hi, a new video has been published on the codeonedigest YouTube channel: a step-by-step tutorial on Spring Boot microservices running in a Docker container, with MongoDB also running in a Docker container.

MongoDB is an open-source document database and a leading NoSQL database, written in C++. This video will give you a complete understanding of running MongoDB in a Docker container. MongoDB works on the concepts of collections and documents. It is a cross-platform, document-oriented database that provides high performance, high availability, and easy scalability. Mongo Database –…


In this article we provide the steps for installing UniFi Network Application / UniFi Controller on an Ubuntu 18.04 / Debian 9 Linux system. Ubiquiti offers a wide range of access points, switches, firewall devices, routers, cameras, and many other appliances that are managed from a single point. The commonly used management interface is provided by UniFi Dream Machine Pro.

The UniFi Network Application (formerly UniFi Controller) is a wireless network management software solution from Ubiquiti Networks™. This tool provides the capability to manage multiple UniFi network devices from a web browser.

UniFi Network Application can be installed on Windows, macOS and Linux operating systems. In an earlier guide we covered the installation process on macOS:

Install UniFi Network Application on macOS

For running in Docker, see the guide in the link below:

How To Run UniFi Controller in Docker Container

Below are the installation requirements for UniFi Network Application:

A DHCP-enabled network
Linux, Mac OS X, or Microsoft Windows 7/8 – running the controller software
Java Runtime Environment 8
Web browser: Mozilla Firefox, Google Chrome, or Microsoft Internet Explorer 8 (or above)

For UniFi Network Application installation on Linux, the supported operating systems as of this article update are:

Ubuntu 18.04 and 16.04
Debian 9 / Debian 8

Software version requirements:

Java 8 (my tests with Java 17 and Java 11 failed)
MongoDB =3.6 (we’ll install MongoDB 4.0)

Before you proceed further, query the OS details in the /etc/os-release file to ensure the OS version requirement is met.
$ cat /etc/os-release
NAME="Ubuntu"
VERSION="18.04.6 LTS (Bionic Beaver)"
ID=ubuntu
ID_LIKE=debian
PRETTY_NAME="Ubuntu 18.04.6 LTS"
VERSION_ID="18.04"
HOME_URL="https://www.ubuntu.com/"
SUPPORT_URL="https://help.ubuntu.com/"
BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
VERSION_CODENAME=bionic
UBUNTU_CODENAME=bionic

From the output we can see this installation is on Ubuntu 18.04 (Bionic Beaver), which is supported.

Add UniFi and MongoDB APT repositories

It’s always a good idea to keep your system updated. Run the commands below to update your OS.

sudo apt update && sudo apt -y full-upgrade

After the update, perform a reboot if it’s required.

[ -f /var/run/reboot-required ] && sudo reboot -f

Install the software packages required to configure the UniFi and MongoDB APT repositories.

sudo apt install curl gpg gnupg2 software-properties-common apt-transport-https lsb-release ca-certificates

Add UniFi APT repository

Import the repository GPG key used in signing UniFi APT packages.

sudo wget -O /etc/apt/trusted.gpg.d/unifi-repo.gpg https://dl.ui.com/unifi/unifi-repo.gpg

Add the UniFi APT repository by executing the command below in your terminal.

echo 'deb https://www.ui.com/downloads/unifi/debian stable ubiquiti' | sudo tee /etc/apt/sources.list.d/ubnt-unifi.list

Add MongoDB APT repository

Start by adding the GPG key to your system keyring.

wget -qO - https://www.mongodb.org/static/pgp/server-4.0.asc | sudo apt-key add -

You should get a message in the output that says “OK” if this was successful. Next, add the repository to your system.
### Ubuntu 18.04 ###
echo "deb https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.0.list

### Debian 9 ###
echo "deb https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.0.list

Once all the repositories have been added, test if they are functional.

### Ubuntu 18.04 ###
$ sudo apt update
Get:1 http://mirrors.digitalocean.com/ubuntu bionic InRelease [242 kB]
Ign:2 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 InRelease
Hit:3 https://repos-droplet.digitalocean.com/apt/droplet-agent main InRelease
Get:4 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 Release [2989 B]
Hit:6 http://mirrors.digitalocean.com/ubuntu bionic-updates InRelease
Hit:7 http://security.ubuntu.com/ubuntu bionic-security InRelease
Get:8 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 Release.gpg [801 B]
Hit:9 http://mirrors.digitalocean.com/ubuntu bionic-backports InRelease
Get:5 https://dl.ubnt.com/unifi/debian stable InRelease [3038 B]
Get:10 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0/multiverse amd64 Packages [18.4 kB]
Get:11 https://dl.ubnt.com/unifi/debian stable/ubiquiti amd64 Packages [732 B]
Fetched 268 kB in 1s (319 kB/s)
Reading package lists... Done
Building dependency tree
Reading state information... Done

### Debian 9 ###
$ sudo apt update
Hit:1 http://security.debian.org stretch/updates InRelease
Ign:2 http://mirrors.digitalocean.com/debian stretch InRelease
Hit:3 http://mirrors.digitalocean.com/debian stretch-updates InRelease
Hit:4 http://mirrors.digitalocean.com/debian stretch Release
Ign:5 https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 InRelease
Hit:6 https://repos-droplet.digitalocean.com/apt/droplet-agent main InRelease
Get:8 https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 Release [1490 B]
Get:9 https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 Release.gpg [801 B]
Get:7 https://dl.ubnt.com/unifi/debian stable InRelease [3038 B]
Get:11 https://dl.ubnt.com/unifi/debian stable/ubiquiti amd64 Packages [732 B]
Fetched 6061 B in 1s (5707 B/s)
Reading package lists... Done
Building dependency tree
Reading state information... Done

Install Java 8 on Ubuntu 18.04 / Debian 9

Prevent your Ubuntu or Debian system from automatically installing Java 11 / Java 17:

sudo apt-mark hold openjdk-11-*
sudo apt-mark hold openjdk-17-*

Install Java 8 from the OS default APT repositories.

sudo apt install openjdk-8-jdk openjdk-8-jre

Remove any newer version of Java installed – Java 11 or Java 17.
sudo apt remove openjdk-11-* openjdk-17-*
sudo apt install openjdk-8-jdk openjdk-8-jre

Confirm the installed Java version with the command java -version; it should show openjdk 1.8.

$ java -version
openjdk version "1.8.0_312"
OpenJDK Runtime Environment (build 1.8.0_312-8u312-b07-0ubuntu1~18.04-b07)
OpenJDK 64-Bit Server VM (build 25.312-b07, mixed mode)

Install UniFi Network Application on Ubuntu 18.04 / Debian 9

We can now install UniFi Network Application on Ubuntu 18.04 / Debian 9 once Java 8 is confirmed to be the default Java version in the system. Run the command below to install the latest release of UniFi Network Application (UniFi Controller).

sudo apt install unifi

Accept the installation prompt as requested.

Reading package lists... Done
Building dependency tree
Reading state information... Done
The following additional packages will be installed:
binutils binutils-common binutils-x86-64-linux-gnu ca-certificates-java fontconfig-config fonts-dejavu-core java-common jsvc libasound2 libasound2-data libavahi-client3 libavahi-common-data libavahi-common3 libbinutils libboost-filesystem1.65.1 libboost-iostreams1.65.1 libboost-program-options1.65.1 libboost-system1.65.1 libcommons-daemon-java libcups2 libfontconfig1 libgoogle-perftools4 libgraphite2-3 libharfbuzz0b libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libpcrecpp0v5 libpcsclite1 libsnappy1v5 libstemmer0d libtcmalloc-minimal4 libyaml-cpp0.5v5 mongo-tools mongodb-clients mongodb-server mongodb-server-core openjdk-17-jre-headless
Suggested packages:
binutils-doc default-jre libasound2-plugins alsa-utils java-virtual-machine cups-common liblcms2-utils pcscd libnss-mdns fonts-dejavu-extra fonts-ipafont-gothic fonts-ipafont-mincho fonts-wqy-microhei | fonts-wqy-zenhei fonts-indic
The following NEW packages will be installed:
binutils binutils-common binutils-x86-64-linux-gnu ca-certificates-java fontconfig-config fonts-dejavu-core java-common jsvc libasound2 libasound2-data libavahi-client3 libavahi-common-data libavahi-common3 libbinutils libboost-filesystem1.65.1 libboost-iostreams1.65.1 libboost-program-options1.65.1 libboost-system1.65.1 libcommons-daemon-java libcups2 libfontconfig1 libgoogle-perftools4 libgraphite2-3 libharfbuzz0b libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libpcrecpp0v5 libpcsclite1 libsnappy1v5 libstemmer0d libtcmalloc-minimal4 libyaml-cpp0.5v5 mongo-tools mongodb-clients mongodb-server mongodb-server-core openjdk-17-jre-headless unifi
0 upgraded, 41 newly installed, 0 to remove and 57 not upgraded.
Need to get 280 MB of archives.
After this operation, 724 MB of additional disk space will be used.
Do you want to continue? [Y/n] y

Manually installing UniFi Network Application on Ubuntu 18.04 / Debian 9

If you prefer to manually download a .deb package, download the UniFi Controller software from the Ubiquiti Networks website. Choose “Debian / Ubuntu Linux and UniFi Cloud Key” from the software list. Click the “Download” button that shows up after selecting. Use the “Download File” button, or copy the direct URL and use a command line downloader to get the file onto your local system.

Downloading the file with wget:

wget https://dl.ui.com/unifi//unifi_sysvinit_all.deb

The .deb package can be installed with apt by passing the downloaded file path as an argument.

$ sudo apt install ./unifi_sysvinit_all.deb
Reading package lists... Done
Building dependency tree
Reading state information... Done
Note, selecting 'unifi' instead of './unifi_sysvinit_all.deb'

The rest of the output (the additional, suggested and NEW package lists, and the download summary) is identical to the apt install unifi output shown above, and ends with the same prompt:

Do you want to continue? [Y/n] y

Successful installation output:

Note, selecting 'unifi' instead of './unifi_sysvinit_all.deb'
unifi is already the newest version (7.1.66-17875-1).
0 upgraded, 0 newly installed, 0 to remove and 57 not upgraded.

Access UniFi Network Application on Web browser

To restart the service, run the following command:

sudo systemctl restart unifi.service

Confirm that the status is running:

$ systemctl status unifi.service
● unifi.service - unifi
Loaded: loaded (/lib/systemd/system/unifi.service; enabled; vendor preset: enabled)
Active: active (running) since Mon 2022-07-11 23:46:08 UTC; 18s ago
Process: 12237 ExecStop=/usr/lib/unifi/bin/unifi.init stop (code=exited, status=0/SUCCESS)
Process: 12307 ExecStart=/usr/lib/unifi/bin/unifi.init start (code=exited, status=0/SUCCESS)
Main PID: 12375 (jsvc)
Tasks: 101 (limit: 2314)
CGroup: /system.slice/unifi.service
├─12375 unifi -cwd /usr/lib/unifi -home /usr/lib/jvm/java-8-openjdk-amd64 -cp /usr/share/java/commons-daemon.jar:/usr/lib/unifi/lib/ace.jar -pidfile /var/run/unifi.pid -procname unifi -ou
├─12377 unifi -cwd /usr/lib/unifi -home /usr/lib/jvm/java-8-openjdk-amd64 -cp /usr/share/java/commons-daemon.jar:/usr/lib/unifi/lib/ace.jar -pidfile /var/run/unifi.pid -procname unifi -ou
├─12378 unifi -cwd /usr/lib/unifi -home /usr/lib/jvm/java-8-openjdk-amd64 -cp /usr/share/java/commons-daemon.jar:/usr/lib/unifi/lib/ace.jar -pidfile /var/run/unifi.pid -procname unifi -ou
├─12397 /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java -Dfile.encoding=UTF-8 -Djava.awt.headless=true -Dapple.awt.UIElement=true -Dunifi.core.enabled=false -Xmx1024M -XX:+ExitOnOutOfMemor
└─12449 bin/mongod --dbpath /usr/lib/unifi/data/db --port 27117 --unixSocketPrefix /usr/lib/unifi/run --logRotate reopen --logappend --logpath /usr/lib/unifi/logs/mongod.log --pidfilepath
Jul 11 23:45:51 unifi-controller systemd[1]: Stopped unifi.
Jul 11 23:45:51 unifi-controller systemd[1]: Starting unifi...
Jul 11 23:45:51 unifi-controller unifi.init[12307]: * Starting Ubiquiti UniFi Network application unifi
Jul 11 23:46:08 unifi-controller unifi.init[12307]: ...done.
Jul 11 23:46:08 unifi-controller systemd[1]: Started unifi.

Services should be available on port 8080 and port 8443.

jmutai@unifi-controller:~$ ss -tunelp | egrep '8080|8443'
tcp LISTEN 0 100 *:8443 *:* uid:112 ino:47897 sk:a v6only:0
tcp LISTEN 0 100 *:8080 *:* uid:112 ino:47891 sk:e v6only:0

Access UniFi Network Application in a web browser using the server IP address and port 8443.

https://172.20.30.20:8443/

You’ll get SSL warnings while trying to access the portal. Click “Advanced” and “Proceed” to the portal.
From your clients (UniFi devices), ping the UniFi controller IP address to validate network connectivity.

U6-LR-BZ.6.0.21# ping 172.20.30.20 -c 2
PING 172.20.30.20 (172.20.30.20): 56 data bytes
64 bytes from 172.20.30.20: seq=0 ttl=63 time=0.883 ms
64 bytes from 172.20.30.20: seq=1 ttl=63 time=0.885 ms
--- 172.20.30.20 ping statistics ---
2 packets transmitted, 2 packets received, 0% packet loss
round-trip min/avg/max = 0.883/0.884/0.885 ms

Pointing UniFi Devices to new Network Application (UniFi Controller)

If this setup is new, your Network Application will discover all UniFi network devices in your network. Check out the initial UniFi Network Application configuration in our recent macOS guide:

Configure UniFi Network Application

If you’re replacing an old Controller, log in to your UniFi devices and set the inform address to the new server address and port. See the example below.

set-inform http://172.20.30.20:8080/inform

Give it some time and the status should reflect the update.

US-16-150W-US.6.2.14# info
Model: US-16-150W
Version: 6.2.14.13855
MAC Address: 98:8a:20:fd:ea:94
IP Address: 192.168.1.116
Hostname: US-16-150W
Uptime: 992330 seconds
Status: Connected (http://172.20.30.20:8080/inform)

Your UniFi devices will be available for administration from a web browser once they’re enrolled / imported for management via UniFi Network Application.

Log Files Location

UniFi Network Application has log files that are essential for any troubleshooting required. The log files available are:

/usr/lib/unifi/logs/server.log
/usr/lib/unifi/logs/mongod.log

We’re working on more articles around UniFi network infrastructure and other integrations. Stay tuned for updates.
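The Java 8 requirement described in this guide can also be checked in a script before installing the unifi package. The following is only a sketch: the function names `is_java8` and `current_java_version` are made up for illustration, and the check assumes the `java -version` output format shown earlier, where the version is the quoted string.

```shell
#!/usr/bin/env bash
# Pre-flight sketch: confirm that the default Java is Java 8 (version 1.8.x).

# Succeed when a `java -version` style version string denotes Java 8.
is_java8() {
  case "$1" in
    1.8.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Extract the quoted version string; `java -version` prints to stderr.
current_java_version() {
  java -version 2>&1 | sed -n 's/.* version "\([^"]*\)".*/\1/p' | head -n 1
}

if command -v java >/dev/null 2>&1; then
  v="$(current_java_version)"
  if is_java8 "$v"; then
    echo "Java $v: matches the Java 8 requirement"
  else
    echo "Java $v: not Java 8"
  fi
else
  echo "java not found"
fi
```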


Windows 10 home vs home n reddit free download. Use Windows 10 for free. No product key required!

Windows 10 home vs home n reddit free download. Media features of the N editions: Windows list

An upgrade is required to use Pro-only features. Revo Uninstaller download and usage - k本的に無料ソフト・フリーソフト

The N and KN editions of Windows 10 include almost the same functionality as Windows 10, except for media-related technologies (Windows Media Player) and certain preinstalled media apps (Music, Video, Voice Recorder, Skype). · Download and install Microsoft Outlook on a Windows PC. You can download and install Microsoft Outlook on your computer for free from this post. This way of using Microsoft Outlook on a PC works on Windows 7/8 / / 10 and all Mac OS versions. Up to Windows , you could not use the system without entering a product key. From Windows 10 onward, it can be used without entering a product key. This is also useful when you want to try out a virtual environment, or create a clone for a brief check of how something behaves.

· Windows 10 comes in two editions: "Home" for general use such as at home, and "Pro" mainly for business use. This article explains in detail how to upgrade a Windows 10 Home PC to Windows 10 Pro. Estimated Reading Time: 3 mins · and so on. "Revo Uninstaller" is a feature-rich uninstall support tool. It is a cleanup tool that cleanly uninstalls a specified application from the system, and during uninstallation. The software, the hard disk and If you used the media creation tool to download an ISO file for Windows 10, you need to burn the ISO file to a DVD before following these steps. Insert the USB flash drive or DVD into the PC on which you want to install Windows 10.

This article is the day 21 entry of the "Docker Advent Calendar". I was planning to write it on my blog regardless of the advent calendar, but a slot happened to be open, so I slipped in. Docker Desktop for Mac and Windows Docker. In fact, some time ago (roughly two years ago), Home Edition could not use Hyper-V (so Docker could not be installed), Docker Compose had problems even with WSL, and Docker Toolbox had reached end of support, so there was no way forward. A while back, however, WSL2 support and Hyper-V support on Home progressed, and Docker Desktop became usable. People who struggled before and ended up installing Docker on a Linux VM and just using that (like me) may want to install Docker on Windows again and build an environment that uses VS Code's Remote Container.

You often see articles describing advance preparation, such as "enable Hyper-V" or "enable Virtual Machine Platform" through PowerShell or the Control Panel's "Turn Windows features on or off", but this is actually unnecessary. The Docker Desktop installer does it for you automatically. If that were all, it would be almost too easy, but installing WSL2 requires some additional work. When Windows starts, "WSL 2 installation is incomplete.

Now that WSL2 is ready as well, press "Restart" in the dialog that was displayed first. This Restart restarts the Docker process, not Windows. There is a hello world container, but given the season, let's run the following instead. Please try it in your own environment to see the result. After that, create the initial user and you can log in to the Wiki; once you complete the initial setup (Wiki name and file upload settings), you can use it normally. For the file upload setting, choosing "MongoDB GridFS" lets you upload files using the internal DB. This is also stopped with Ctrl-c, but the Wiki data remains after stopping. If you add the -d option and run docker-compose up -d, it runs in the background, so that may be better for everyday use.

If you install VS Code and the Remote Development extension on a PC with Docker Desktop installed, you can use Remote Containers to build a modern container-based development and work environment: "remote execution of VS Code in a container that volume-mounts a specified directory on Windows". For example, in the menu shown when you press the connection icon at the bottom left, "Remote-Containers: Open Folder in Container If you have containers deployed with Docker Compose, hovering the mouse cursor over one in the Container List shown when Docker Desktop starts displays an "Open in Visual Studio Code" button.

Pressing this button opens, in VS Code, the directory containing the Compose file (docker-compose.yml) that was used to start the container. This article covered installing Docker Desktop on Windows 10 Home and, as usage examples, docker and docker-compose on the command line and VS Code's Remote Containers feature.

Docker Advent Calendar Day. Author: zaki-lknr, 株式会社エーピーコミュニケーションズ. Tags: Docker, Windows10, docker-compose, VSCode.

Installing Docker has become very easy on Windows 10 Home Edition too, and Docker Compose is available out of the box.

Environment: Windows 10 Home. Download the installer exe in advance, then run it. Leave the checkboxes at their defaults and press "OK". Incidentally, on Windows 10 Pro a checkbox for enabling Hyper-V is also shown, but with the default checks the installation flow is the same for Home and Pro. Wait a little while and the installation completes. Pressing "Close and restart" restarts the Windows OS.


Install Docker on Linux and run a MongoDB Container.

Hi, hope you are doing well. Let's learn how to set up and install Docker on Linux and run a MongoDB container. Docker is one of the fastest growing technologies in the IT market, and many companies are moving from plain EC2 instances to Docker. Docker is a container technology: a PaaS (Platform as a Service) product that uses OS-level virtualisation to deliver software in packages called…
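Since the post is cut off before its steps, here is a minimal sketch of what installing Docker on Linux and starting a MongoDB container can look like. It is not taken from the original post. It assumes Docker's convenience script at get.docker.com and the official mongo image, which listens on port 27017 and stores its data in /data/db; the container name mongodb, the volume name mongo_data, and the `DRY_RUN` switch are illustrative choices. By default the script only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 to actually run them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

install_docker() {
  # Docker's convenience script; review it before running on a real host.
  run curl -fsSL https://get.docker.com -o get-docker.sh
  run sudo sh get-docker.sh
}

start_mongodb() {
  # A named volume keeps the database files across container restarts.
  run docker volume create mongo_data
  run docker run -d --name mongodb -p 27017:27017 -v mongo_data:/data/db mongo
}

install_docker
start_mongodb
```

Once the container is running, `docker ps` should list it, and `docker logs mongodb` shows the server's startup output.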


Docker Commands Windows

Docker Commands Windows Server 2016

MongoDB document databases provide high availability and easy scalability. You do not need to push your certificates with git commands. When the Docker Desktop application starts, it copies the ~/.docker/certs.d folder on your Windows system to the /etc/docker/certs.d directory on Moby (the Docker Desktop virtual machine running on Hyper-V). Docker Desktop for Windows can’t route traffic to Linux containers. However, you can ping the Windows containers. Per-container IP addressing is not possible. The docker (Linux) bridge network is not reachable from the Windows host. However, it works with Windows containers. Use cases and workarounds.

Welcome to Docker Desktop! The Docker Desktop for Windows user manual provides information on how to configure and manage your Docker Desktop settings.

For information about Docker Desktop download, system requirements, and installation instructions, see Install Docker Desktop.

Settings

The Docker Desktop menu allows you to configure your Docker settings such as installation, updates, version channels, Docker Hub login, and more.

This section explains the configuration options accessible from the Settings dialog.

Open the Docker Desktop menu by clicking the Docker icon in the Notifications area (or System tray):

Select Settings to open the Settings dialog:

General

On the General tab of the Settings dialog, you can configure when to start and update Docker.

Start Docker when you log in - Automatically start Docker Desktop upon Windows system login.

Expose daemon on tcp://localhost:2375 without TLS - Click this option to enable legacy clients to connect to the Docker daemon. You must use this option with caution as exposing the daemon without TLS can result in remote code execution attacks.
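As a rough illustration of what this option enables, a client can be pointed at the TCP endpoint with the docker CLI's `-H` flag. The sketch below only prints the command unless `DRY_RUN=0` is set; the `run` helper and the function name are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Dry-run by default: commands are printed, not executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Ask the daemon for its version over the unencrypted TCP endpoint.
# Anything that can reach this port can control the daemon, hence the warning above.
check_tcp_daemon() {
  run docker -H tcp://localhost:2375 version
}

check_tcp_daemon
```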

Send usage statistics - By default, Docker Desktop sends diagnostics, crash reports, and usage data. This information helps Docker improve and troubleshoot the application. Clear the check box to opt out. Docker may periodically prompt you for more information.

Resources

The Resources tab allows you to configure CPU, memory, disk, proxies, network, and other resources. Different settings are available for configuration depending on whether you are using Linux containers in WSL 2 mode, Linux containers in Hyper-V mode, or Windows containers.

Advanced

Note

The Advanced tab is only available in Hyper-V mode, because in WSL 2 mode and Windows container mode these resources are managed by Windows. In WSL 2 mode, you can configure limits on the memory, CPU, and swap size allocated to the WSL 2 utility VM.
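In WSL 2 mode those limits are set in a .wslconfig file in the Windows user profile directory. The sketch below prints a sample file; the `[wsl2]` section and its `memory`, `processors` and `swap` keys are WSL settings, while the specific values and the function name `wslconfig_sample` are only illustrative.

```shell
#!/usr/bin/env bash
# Print a sample .wslconfig that caps the resources of the WSL 2 utility VM.
# The values are examples, not recommendations.
wslconfig_sample() {
  cat <<'EOF'
[wsl2]
memory=4GB
processors=2
swap=1GB
EOF
}

wslconfig_sample
```

From Git Bash, for example, the output could be redirected to "$USERPROFILE/.wslconfig"; the limits are read the next time WSL starts, for instance after running `wsl --shutdown`.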

Use the Advanced tab to limit resources available to Docker.

CPUs: By default, Docker Desktop is set to use half the number of processors available on the host machine. To increase processing power, set this to a higher number; to decrease, lower the number.

Memory: By default, Docker Desktop is set to use 2 GB runtime memory, allocated from the total available memory on your machine. To increase the RAM, set this to a higher number. To decrease it, lower the number.

Swap: Configure swap file size as needed. The default is 1 GB.

Disk image size: Specify the size of the disk image.

Disk image location: Specify the location of the Linux volume where containers and images are stored.

You can also move the disk image to a different location. If you attempt to move a disk image to a location that already has one, you get a prompt asking if you want to use the existing image or replace it.

File sharing

Note

The File sharing tab is only available in Hyper-V mode, because in WSL 2 mode and Windows container mode all files are automatically shared by Windows.

Use File sharing to allow local directories on Windows to be shared with Linux containers. This is especially useful for editing source code in an IDE on the host while running and testing the code in a container. Note that configuring file sharing is not necessary for Windows containers, only Linux containers. If a directory is not shared with a Linux container, you may get "file not found" or "cannot start service" errors at runtime. See Volume mounting requires shared folders for Linux containers.

File share settings are:

Add a Directory: Click + and navigate to the directory you want to add.

Apply & Restart makes the directory available to containers using Docker’s bind mount (-v) feature.

Tips on shared folders, permissions, and volume mounts

Share only the directories that you need with the container. File sharing introduces overhead as any changes to the files on the host need to be notified to the Linux VM. Sharing too many files can lead to high CPU load and slow filesystem performance.

Shared folders are designed to allow application code to be edited on the host while being executed in containers. For non-code items such as cache directories or databases, the performance will be much better if they are stored in the Linux VM, using a data volume (named volume) or data container.

Docker Desktop sets permissions to read/write/execute for users, groups, and others (0777 or a+rwx). This is not configurable. See Permissions errors on data directories for shared volumes.

Windows presents a case-insensitive view of the filesystem to applications while Linux is case-sensitive. On Linux it is possible to create 2 separate files: test and Test, while on Windows these filenames would actually refer to the same underlying file. This can lead to problems where an app works correctly on a developer Windows machine (where the file contents are shared) but fails when run in Linux in production (where the file contents are distinct). To avoid this, Docker Desktop insists that all shared files are accessed as their original case. Therefore if a file is created called test, it must be opened as test. Attempts to open Test will fail with “No such file or directory”. Similarly once a file called test is created, attempts to create a second file called Test will fail.
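The case-sensitivity tip above can be checked before a directory is shared. The following is a minimal sketch, not part of Docker Desktop: find_case_collisions is a hypothetical helper that takes a list of file names and reports the groups that differ only by letter case, which are the names that would be distinct files on Linux but the same file on Windows.

```python
from collections import defaultdict


def find_case_collisions(names):
    """Return groups of names that differ only by letter case.

    Hypothetical helper for illustration; it inspects names only and
    does not touch the filesystem.
    """
    groups = defaultdict(set)
    for name in names:
        # casefold() gives a case-insensitive key for grouping.
        groups[name.casefold()].add(name)
    # Keep only keys that map to more than one distinct spelling.
    return [sorted(spellings) for spellings in groups.values() if len(spellings) > 1]


collisions = find_case_collisions(["test", "Test", "README.md"])
print(collisions)  # [['Test', 'test']]
```

To scan a real directory, the names could come from os.listdir on the folder you intend to share.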

Shared folders on demand

You can share a folder “on demand” the first time a particular folder is used by a container.

If you run a Docker command from a shell with a volume mount, or kick off a Compose file that includes volume mounts, you get a popup asking if you want to share the specified folder.

You can select Share it, in which case the folder is added to your Docker Desktop Shared Folders list and made available to containers. Alternatively, you can opt not to share it by selecting Cancel.

Proxies

Docker Desktop lets you configure HTTP/HTTPS Proxy Settings and automatically propagates these to Docker. For example, if you set your proxy settings to http://proxy.example.com, Docker uses this proxy when pulling containers.

Your proxy settings, however, will not be propagated into the containers you start. If you wish to set the proxy settings for your containers, you need to define environment variables for them, just as you would on Linux.

For more information on setting environment variables for running containers, see Set environment variables.
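As a rough illustration of passing proxy settings into a container, the sketch below assembles a docker run command line that sets HTTP_PROXY with the -e flag. The helper name docker_run_with_proxy is hypothetical, the alpine image and env command are only placeholders, and the proxy URL is the example address used above. The script only builds and prints the command; it does not run Docker.

```python
import shlex


def docker_run_with_proxy(image, command, http_proxy, https_proxy=None, no_proxy=None):
    """Build an argument list for `docker run` with proxy environment variables.

    Hypothetical helper for illustration; nothing is executed here.
    """
    env = {"HTTP_PROXY": http_proxy}
    if https_proxy:
        env["HTTPS_PROXY"] = https_proxy
    if no_proxy:
        env["NO_PROXY"] = no_proxy

    argv = ["docker", "run", "--rm"]
    for key, value in env.items():
        # Each -e KEY=VALUE pair defines one variable inside the container.
        argv += ["-e", f"{key}={value}"]
    argv.append(image)
    argv += list(command)
    return argv


argv = docker_run_with_proxy("alpine", ["env"], "http://proxy.example.com")
print(shlex.join(argv))
# docker run --rm -e HTTP_PROXY=http://proxy.example.com alpine env
```

Running the printed command should list HTTP_PROXY among the container's environment variables, which confirms the value reached the container.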

Network

Note

The Network tab is not available in Windows container mode because networking is managed by Windows.

You can configure Docker Desktop networking to work on a virtual private network (VPN). Specify a network address translation (NAT) prefix and subnet mask to enable Internet connectivity.

DNS Server: You can configure the DNS server to use dynamic or static IP addressing.

Note

Some users reported problems connecting to Docker Hub on Docker Desktop. This would manifest as an error when trying to run docker commands that pull images from Docker Hub that are not already downloaded, such as a first-time run of docker run hello-world. If you encounter this, reset the DNS server to use the Google DNS fixed address: 8.8.8.8. For more information, see Networking issues in Troubleshooting.

Updating these settings requires a reconfiguration and reboot of the Linux VM.

WSL Integration

In WSL 2 mode, you can configure which WSL 2 distributions will have the Docker WSL integration.

By default, the integration will be enabled on your default WSL distribution. To change your default WSL distro, run wsl --set-default <distro name>. (For example, to set Ubuntu as your default WSL distro, run wsl --set-default ubuntu).

You can also select any additional distributions you would like to enable the WSL 2 integration on.

For more details on configuring Docker Desktop to use WSL 2, see Docker Desktop WSL 2 backend.

Docker Engine

The Docker Engine page allows you to configure the Docker daemon to determine how your containers run.

Type a JSON configuration file in the box to configure the daemon settings. For a full list of options, see the Docker Engine dockerd command-line reference.

Click Apply & Restart to save your settings and restart Docker Desktop.
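Because the box expects valid JSON, it can help to check the text before pasting it in. The sketch below is one way to do that, under stated assumptions: check_daemon_json is a hypothetical helper, and the sample uses the debug, experimental, and dns daemon options purely as an illustration. Consult the dockerd reference for the options that apply to your setup.

```python
import json


def check_daemon_json(text):
    """Parse daemon configuration text and return it as a dict.

    Hypothetical helper: it only checks that the text is a JSON object,
    not that each option is one the daemon accepts.
    """
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(config, dict):
        raise ValueError("daemon configuration must be a JSON object")
    return config


# Illustrative configuration; the values are assumptions, not recommendations.
sample = """
{
  "debug": true,
  "experimental": false,
  "dns": ["8.8.8.8"]
}
"""
config = check_daemon_json(sample)
print(sorted(config))  # ['debug', 'dns', 'experimental']
```

A common mistake this catches is a trailing comma after the last entry, which JSON does not allow.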

Command Line

On the Command Line page, you can specify whether or not to enable experimental features.

You can toggle the experimental features on and off in Docker Desktop. If you toggle the experimental features off, Docker Desktop uses the current generally available release of Docker Engine.

Experimental features

Experimental features provide early access to future product functionality. These features are intended for testing and feedback only, as they may change between releases without warning or can be removed entirely from a future release. Experimental features must not be used in production environments. Docker does not offer support for experimental features.

For a list of current experimental features in the Docker CLI, see Docker CLI Experimental features.

Run docker version to verify whether you have enabled experimental features. Experimental mode is listed under Server data. If Experimental is true, then Docker is running in experimental mode.
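The same check can be scripted. The sketch below assumes the docker CLI is on your PATH and uses its --format option with the template {{.Server.Experimental}}, which prints only the Experimental field from the Server data. The function names parse_experimental and server_is_experimental are hypothetical.

```python
import subprocess


def parse_experimental(output):
    """Interpret the text printed by the format query as a boolean."""
    return output.strip().lower() == "true"


def server_is_experimental():
    """Ask the local Docker CLI whether the server reports experimental mode.

    Assumes `docker` is installed and the daemon is running; otherwise
    subprocess raises FileNotFoundError or CalledProcessError.
    """
    result = subprocess.run(
        ["docker", "version", "--format", "{{.Server.Experimental}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_experimental(result.stdout)
```

Calling server_is_experimental() on a machine with Docker Desktop running returns True only when the daemon reports experimental mode.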

Kubernetes

Note

The Kubernetes tab is not available in Windows container mode.

Docker Desktop includes a standalone Kubernetes server that runs on your Windows machine, so that you can test deploying your Docker workloads on Kubernetes. To enable Kubernetes support and install a standalone instance of Kubernetes running as a Docker container, select Enable Kubernetes.

For more information about using the Kubernetes integration with Docker Desktop, see Deploy on Kubernetes.

Reset

The Restart Docker Desktop and Reset to factory defaults options are now available on the Troubleshoot menu. For information, see Logs and Troubleshooting.

Troubleshoot

Visit our Logs and Troubleshooting guide for more details.

Log on to our Docker Desktop for Windows forum to get help from the community, review current user topics, or join a discussion.

Log on to Docker Desktop for Windows issues on GitHub to report bugs or problems and review community reported issues.

For information about providing feedback on the documentation or updating it yourself, see Contribute to documentation.

Switch between Windows and Linux containers

From the Docker Desktop menu, you can toggle which daemon (Linux or Windows) the Docker CLI talks to. Select Switch to Windows containers to use Windows containers, or select Switch to Linux containers to use Linux containers (the default).

For more information on Windows containers, refer to the following documentation:

Microsoft documentation on Windows containers.

Build and Run Your First Windows Server Container (Blog Post) gives a quick tour of how to build and run native Docker Windows containers on Windows 10 and Windows Server 2016 evaluation releases.

Getting Started with Windows Containers (Lab) shows you how to use the MusicStore application with Windows containers. The MusicStore is a standard .NET application and, forked here to use containers, is a good example of a multi-container application.

To understand how to connect to Windows containers from the local host, see Limitations of Windows containers for localhost and published ports.

Settings dialog changes with Windows containers

When you switch to Windows containers, the Settings dialog only shows those tabs that are active and apply to your Windows containers:

If you set proxies or daemon configuration in Windows containers mode, these apply only to Windows containers. If you switch back to Linux containers, proxies and daemon configurations return to what you had set for Linux containers. Your Windows container settings are retained and become available again when you switch back.

Dashboard

The Docker Desktop Dashboard enables you to interact with containers and applications and manage the lifecycle of your applications directly from your machine. The Dashboard UI shows all running, stopped, and started containers with their state. It provides an intuitive interface to perform common actions to inspect and manage containers and Docker Compose applications. For more information, see Docker Desktop Dashboard.

Docker Hub

Select Sign in / Create Docker ID from the Docker Desktop menu to access your Docker Hub account. Once logged in, you can access your Docker Hub repositories directly from the Docker Desktop menu.

For more information, refer to the following Docker Hub topics:

Two-factor authentication

Docker Desktop enables you to sign into Docker Hub using two-factor authentication. Two-factor authentication provides an extra layer of security when accessing your Docker Hub account.

You must enable two-factor authentication in Docker Hub before signing into your Docker Hub account through Docker Desktop. For instructions, see Enable two-factor authentication for Docker Hub.

After you have enabled two-factor authentication:

Go to the Docker Desktop menu and then select Sign in / Create Docker ID.

Enter your Docker ID and password and click Sign in.

After you have successfully signed in, Docker Desktop prompts you to enter the authentication code. Enter the six-digit code from your phone and then click Verify.

After you have successfully authenticated, you can access your organizations and repositories directly from the Docker Desktop menu.

Adding TLS certificates

You can add trusted Certificate Authorities (CAs) to your Docker daemon to verify registry server certificates, and client certificates to authenticate to registries.

How do I add custom CA certificates?

Docker Desktop supports all trusted Certificate Authorities (CAs) (root or intermediate). Docker recognizes certs stored under Trusted Root Certification Authorities or Intermediate Certification Authorities.

Docker Desktop creates a certificate bundle of all user-trusted CAs based on the Windows certificate store, and appends it to Moby trusted certificates. Therefore, if an enterprise SSL certificate is trusted by the user on the host, it is trusted by Docker Desktop.

To learn more about how to install a CA root certificate for the registry, see Verify repository client with certificates in the Docker Engine topics.

How do I add client certificates?

You can add your client certificates in ~/.docker/certs.d/<MyRegistry>:<Port>/client.cert and ~/.docker/certs.d/<MyRegistry>:<Port>/client.key. You do not need to push your certificates with git commands.
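To make the directory layout concrete, the sketch below builds the two paths for a given registry. It is illustrative only: client_cert_paths is a hypothetical helper, and registry.example.com with port 5000 is a made-up registry address. The paths are kept in the ~/ form used above rather than expanded to a real home directory.

```python
from pathlib import PurePosixPath


def client_cert_paths(registry, port):
    """Return the documented client.cert and client.key locations.

    Hypothetical helper; it only formats paths and creates no files.
    """
    # One directory per registry, named <MyRegistry>:<Port>.
    base = PurePosixPath("~/.docker/certs.d") / f"{registry}:{port}"
    return str(base / "client.cert"), str(base / "client.key")


cert_path, key_path = client_cert_paths("registry.example.com", 5000)
print(cert_path)  # ~/.docker/certs.d/registry.example.com:5000/client.cert
print(key_path)   # ~/.docker/certs.d/registry.example.com:5000/client.key
```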